I call this process the “Machine Learning Loop” — that hugely important and often forgotten connection at the end of the machine learning development pipeline where we cycle back to the beginning to incorporate feedback into the system.

In other words, it’s all about the iteration.

What do we mean by iterations? Machine learning models are never really “done.” They need to ingest new data to learn new insights, and that’s why we need to think of the process as a loop rather than a straight line forward. The process starts over as new data flows into the system, forming a continuous, virtuous circle of model creation and deployment steps.

And this ability to iterate (“completing the loop”) is arguably the broken part of machine learning and data science today. That’s because it usually relies on a multitude of highly error-prone manual steps, and baking them all into a robust production platform is even harder.

To get to a complete Learning Loop we need to figure out not only how to perform iterations, but also how to iterate gracefully, consistently, and reliably as we improve our ML-based systems.¹

Now, let’s look at how we get there.

Capability vs. Ability

In the field of AI, you’ve got to distinguish capability from ability. For instance, I may have the capability of becoming a decent piano player, but my ability to play “La Campanella” is limited by the amount of time I spend practicing.

In the case of speech recognition, my transformer architecture may have the capability of learning a variety of accents and languages, but my model’s ability to do so is very much dependent on how it was trained and the data that it’s learning from.

Many businesses and organizations rely on machine learning models to provide insight, improve quality, and reduce costs. Yet without data, a model is useless: the AI remains only a capability. Data is how we turn AI capability into ability.

Data is one of the biggest bets of the century, and it’s a good one. Each interaction with Siri is used to make “her” better. Text and email messaging services are learning to automate our responses. The pictures we post on Instagram help platforms personalize ads to us. Our interactions with everything around us are bursting with information about our habits, location, preferences, and intentions. Data is a tremendous resource, but capturing it and rendering it usable is today’s equivalent of the oil mining and refining process of centuries past.

But data is different from natural resources. Data is a dynamic, growing, living resource with many sources. It changes. Even in academia, new ImageNet datasets and challenges are released every few years because real-world data and needs change.

And with every change, the “learning software” must learn from the new information.

The Data Science Process

Many companies with data science teams have structured the data science process as a one-time thing: a model development phase made up of offline, sequential steps that require multiple handoffs and manual processes, followed by a production phase in which that model is deployed into an online software application.

But once you have a model in production, you quickly realize that it will require iteration, because production data is always different from training data. In my work on speech recognition, there was always a new accent, a different type of noisy environment, or even new words to incorporate into the model’s vocabulary.

As an example, just a few years back, my team was analyzing popular topics in the news with a speech recognition model and found a large increase in an odd phrase, “bread sit.” Once we realized that this output was the closest match in our model for a brand new word, “Brexit,” we had to quickly figure out how to incorporate this new term into our production model’s vocabulary.

The only constant in machine learning and the real world is change.

Software Development: Two Life Cycles Diverge in the Woods

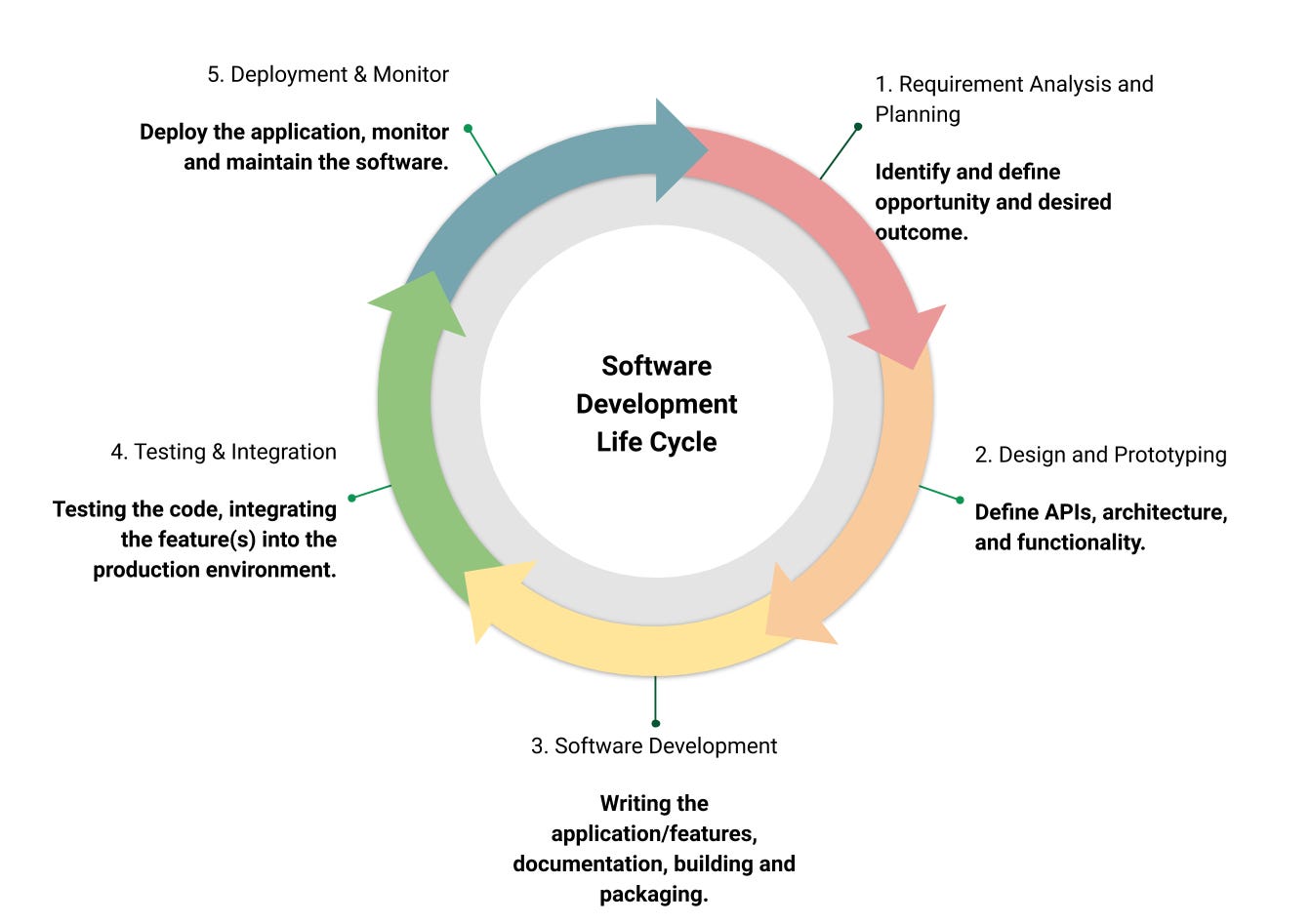

At first glance the Machine Learning Loop that we’re describing sounds very similar to the software development life cycle (SDLC) — a logical process that aims to produce high-quality software through a series of development stages. Completing the loop is done by incorporating feedback from a new feature into the planning of future iterations.

Conceptually, the SDLC is useful in describing what to consider during each stage of development, but it doesn’t prescribe how to execute this process effectively. We could perform each of these stages manually, one at a time; however, the more manual the process, the less reliable and agile iterations can be.

If we look at how software development has progressed practically, we see an incredible amount of tooling and automation to aid the SDLC. Manual handoffs have been removed by merging development and operations, and quality has improved significantly by having frequent releases and feedback channels. This cultural shift in software development has been centered around DevOps.

For those who don’t know, DevOps is a culture and practice of combining development and operations (Development + Operations = DevOps). The adoption of DevOps has led to shorter development cycles, increased deployment speed, and improved release dependability, primarily through CI/CD (Continuous Integration & Continuous Deployment). DevOps places a strong emphasis on testing and releasing frequently, so that breaking changes are caught early instead of being discovered right before software is deployed. The key developments needed for the shift to DevOps to succeed were:

- Version control — managing code in versions, tracking history, roll back to a previous version if needed

- CI/CD — automated testing on code changes, remove manual overhead

- Agile software development — short release cycles, incorporate feedback, emphasize team collaboration

- Continuous monitoring — ensure visibility into the performance and health of the application, alerts for undesired conditions

- Infrastructure as code — automate dependable deployments

The testing piece is crucial to ensure that frequent changes aren’t sacrificing quality by introducing bugs into your software. When we look at how DevOps is applied to software development, it looks something like the figure below.

- We start with the code repository, adding unit tests to ensure that the addition of a new feature doesn’t break functionality.

- Code is then integration tested. That’s where different software pieces are tested together to ensure the full system functions as expected.

- The system is then deployed and monitored, collecting information on user experience, resource utilization, errors, alerts and notifications that inform future development.
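To make the unit-test step concrete, here is a minimal sketch using Python’s built-in `unittest` module. The function `normalize_transcript` is a hypothetical stand-in for a small feature in a speech recognition codebase; the point is only that every code change runs against tests like these before it is integrated.

```python
import unittest


def normalize_transcript(text: str) -> str:
    """Hypothetical feature code: lowercase a transcript and collapse whitespace."""
    return " ".join(text.lower().split())


class NormalizeTranscriptTest(unittest.TestCase):
    def test_lowercases(self):
        self.assertEqual(normalize_transcript("Brexit"), "brexit")

    def test_collapses_whitespace(self):
        self.assertEqual(normalize_transcript("  bread   sit \n"), "bread sit")


# In CI these tests would run automatically on every change; here we run them directly.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(NormalizeTranscriptTest)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

Integration tests follow the same pattern, but exercise several components together instead of one function in isolation.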

DevOps was a huge win in the software development world, relying heavily on automation to improve quality. These practices and tools work amazingly for code, but what about machine learning development and data?

The Two Loops

Machine learning development is not the same as software development. The most important difference is that we are now dealing with two moving pieces: Code and Data.

And rather than iterating through the development process with the SDLC, we are actually working with two loops: a code loop which has a symbiotic relationship with the data loop.

The two loops are a conceptual depiction of machine learning development, much like the SDLC is for software development. While the software development life cycle operates in the code loop to produce high-quality software, the product of the data loop must be high-quality, reliable data, ready to be combined with ML code to produce production ML pipelines and models.

The better the coupling between these two loops, the more quickly and reliably iterations can occur. Ideally, each of these loops can be loosely coupled and iterated on simultaneously. In software development, a library or project can be created and released with a regular cadence. Feature development can continue, while the decision of which version of the library to use in a given piece of software is left to its users. We would like to iterate on data in the same way: datasets released with a regular cadence, updated frequently, leaving it up to the ML system and data scientists to incorporate the appropriate version. In essence, we want to be able to treat data like software.
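One way to picture “datasets released with a regular cadence” is a registry of immutable dataset releases that consumers pin, just as they pin library versions. The sketch below is purely illustrative: `DatasetRelease`, `DatasetRegistry`, and the `speech-train` dataset are hypothetical names, not part of any particular tool.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DatasetRelease:
    """One published, immutable version of a dataset (hypothetical structure)."""
    name: str
    version: str   # "MAJOR.MINOR.PATCH", compared numerically below
    files: tuple   # the data files that make up this release


@dataclass
class DatasetRegistry:
    """Toy registry: the data loop publishes releases, consumers choose one."""
    releases: dict = field(default_factory=dict)

    def publish(self, release: DatasetRelease) -> None:
        key = (release.name, release.version)
        if key in self.releases:
            # Like a library release, a published version is never replaced.
            raise ValueError(f"{release.name} {release.version} already published")
        self.releases[key] = release

    def get(self, name: str, version: str) -> DatasetRelease:
        """Pinned lookup: the same (name, version) always returns the same data."""
        return self.releases[(name, version)]

    def latest(self, name: str) -> DatasetRelease:
        candidates = [r for (n, _), r in self.releases.items() if n == name]
        if not candidates:
            raise KeyError(name)
        return max(candidates, key=lambda r: tuple(int(p) for p in r.version.split(".")))


registry = DatasetRegistry()
registry.publish(DatasetRelease("speech-train", "1.0.0", ("clips_2019.tar",)))
registry.publish(DatasetRelease("speech-train", "1.1.0", ("clips_2019.tar", "clips_brexit.tar")))

# The data loop keeps releasing; this training job stays pinned until it opts in.
pinned = registry.get("speech-train", "1.0.0")
newest = registry.latest("speech-train")
print(pinned.version, newest.version)  # 1.0.0 1.1.0
```

The design choice mirrors library versioning: publishing never mutates an existing release, so the data team can move fast while each consumer decides when to upgrade.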

Data: The new source code

ML code, just like code in traditional software development, needs to be versioned, reliable, and tested. However, although it requires similar attention, data is quite different from code:

- Data is bigger in size and quantity

- Data grows and changes frequently

- Data has many types and forms (video, audio, text, tabular, etc.)

- Data has privacy challenges, beyond managing secrets (GDPR, CCPA, HIPAA, etc.)

- Data can exist in various stages (raw, processed, filtered, feature-form, model artifacts, metadata, etc.)

Not only is data different, but we have a new stage in the development process — the model training stage, or data+code. This is where ML code is combined with our data to produce an artifact. This process needs to be reliable and reproducible if we want to be able to create high quality models and deploy in an efficient manner.
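A minimal sketch of what “reproducible” means for the data+code stage, assuming a toy linear-regression model stands in for real ML code: when the data and every source of randomness (here, a single seed) are fixed, two training runs should produce bit-identical parameters, which we can check by hashing the artifact. Real frameworks add sources of nondeterminism, such as GPU kernels and parallel data loading, that need extra care.

```python
import hashlib
import json
import random


def train(data: list[tuple[float, float]], seed: int, epochs: int = 50, lr: float = 0.01) -> dict:
    """Fit y = w*x + b with plain SGD; all randomness comes from `seed`."""
    rng = random.Random(seed)          # local RNG, independent of global state
    w, b = rng.uniform(-1, 1), 0.0
    order = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(order)             # the shuffle order is determined by the seed
        for i in order:
            x, y = data[i]
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return {"w": w, "b": b}


def fingerprint(artifact: dict) -> str:
    """Hash the model parameters so two runs can be compared exactly."""
    payload = json.dumps(artifact, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()


data = [(x / 10, 2 * (x / 10) + 1) for x in range(20)]
run_a = fingerprint(train(data, seed=7))
run_b = fingerprint(train(data, seed=7))
print(run_a == run_b)  # True: same data + same code + same seed
```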

Data Bugs

With ML code, logic is no longer manually coded, but learned. The subtle thing about this new training stage is that it actually makes the quality of our data just as important as the quality of our code. That also means that bugs in our model can be the result of either bugs in code or in data.

We see more and more examples of data bugs in the news every day. In many institutions, AI Red Teams are forming to find vulnerabilities and bugs within ML models before they’re deployed, because these types of bugs are incredibly costly: they can cause serious reputational damage, hurt the bottom line, or even create physically dangerous, life-and-death situations.

- Placing stickers on the road tricked Tesla’s Autopilot into switching lanes (Adversarial and Perturbation attacks)

- GPT-3 exhibits racial and gender bias (Data bias and skew)

- Microsoft’s Twitter bot, Tay, quickly became racist (Poisoning attacks)

- Google’s image recognition system mislabeled a Black woman as a gorilla (Class imbalance/lack of proper data coverage)

The key thing to see here is that every single one of these mistakes was caused by data bugs, not code problems. If the data is bad, it doesn’t matter how good your code is.

Biased data is buggy data — if it’s unreliable or doesn’t represent the domain then it’s a bug.

Just as we test software for bugs in code, we have to test our data for bugs too. That means that in machine learning systems, the testing and monitoring structure should look similar to this:

We can see the new “Model Training” stage in the middle here, taking in training data, combining it with our ML code, and delivering models and software to the Running System.

As before, all of the software development considerations remain (with additional integration tests needed for the model training environment), but we now must place just as much emphasis on the data tests. Just as our code should be tested before it is ready to deploy, data tests should ensure that we have high quality data, that our model can learn from it effectively, and that we can iterate on it to improve it.
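Data tests can start very simply. The following sketch, with hypothetical field names borrowed from the speech example, checks for missing or null required fields and for under-represented labels, the kind of coverage gap behind the image-labeling failure above. The function `check_dataset` and its 10% threshold are assumptions for illustration; a real suite would be tuned to the domain.

```python
from collections import Counter


def check_dataset(rows: list[dict], required: set[str], label_key: str,
                  min_class_share: float = 0.1) -> list[str]:
    """Return human-readable data bugs; an empty list means every check passed."""
    if not rows:
        return ["dataset is empty"]
    problems = []
    for i, row in enumerate(rows):
        missing = required - set(row)
        if missing:
            problems.append(f"row {i}: missing fields {sorted(missing)}")
        elif any(row[key] is None for key in required):
            problems.append(f"row {i}: null value in a required field")
    # Coverage check: flag labels that make up too small a share of the data.
    labels = Counter(row[label_key] for row in rows if row.get(label_key) is not None)
    total = sum(labels.values())
    for label, count in sorted(labels.items()):
        share = count / total
        if share < min_class_share:
            problems.append(f"label {label!r} is only {share:.0%} of the data")
    return problems


rows = [{"audio": f"us_{i}.wav", "text": "hello", "accent": "us"} for i in range(9)]
rows.append({"audio": "sc_0.wav", "text": "hello", "accent": "scottish"})
rows.append({"audio": "us_9.wav", "text": None, "accent": "us"})

for problem in check_dataset(rows, required={"audio", "text"}, label_key="accent"):
    print(problem)
# row 10: null value in a required field
# label 'scottish' is only 9% of the data
```

Run in CI against each new data commit, checks like these play the same role for the data loop that unit tests play for the code loop.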

The data aspect is so important that Andrew Ng, one of the most well-known names in machine learning (Stanford professor, co-founder of Google Brain, Chief Scientist at Baidu, among many other accolades), points to data as the key way to bridge the gap between research and production for machine learning:

“Keeping the algorithm fixed and varying the training data was really, in my view, the most efficient way to build the system.” — Andrew Ng

This is the “what” we need to bring to ML development, but how do we do it? What tools will enable us to do it right?

In ML systems, we need to be able to embrace data changes. DevOps principles need to be applied effectively to code, data, model training, pipeline deployment, and many other processes to facilitate rapid iteration. This is the budding field of MLOps.

MLOps: DevOps for Machine Learning

Similar to DevOps, MLOps is the developer-centric approach to managing machine learning software. DevOps for a Software 2.0 world. It focuses on the proper way to manage the full life cycle of machine learning development and the tooling needed.

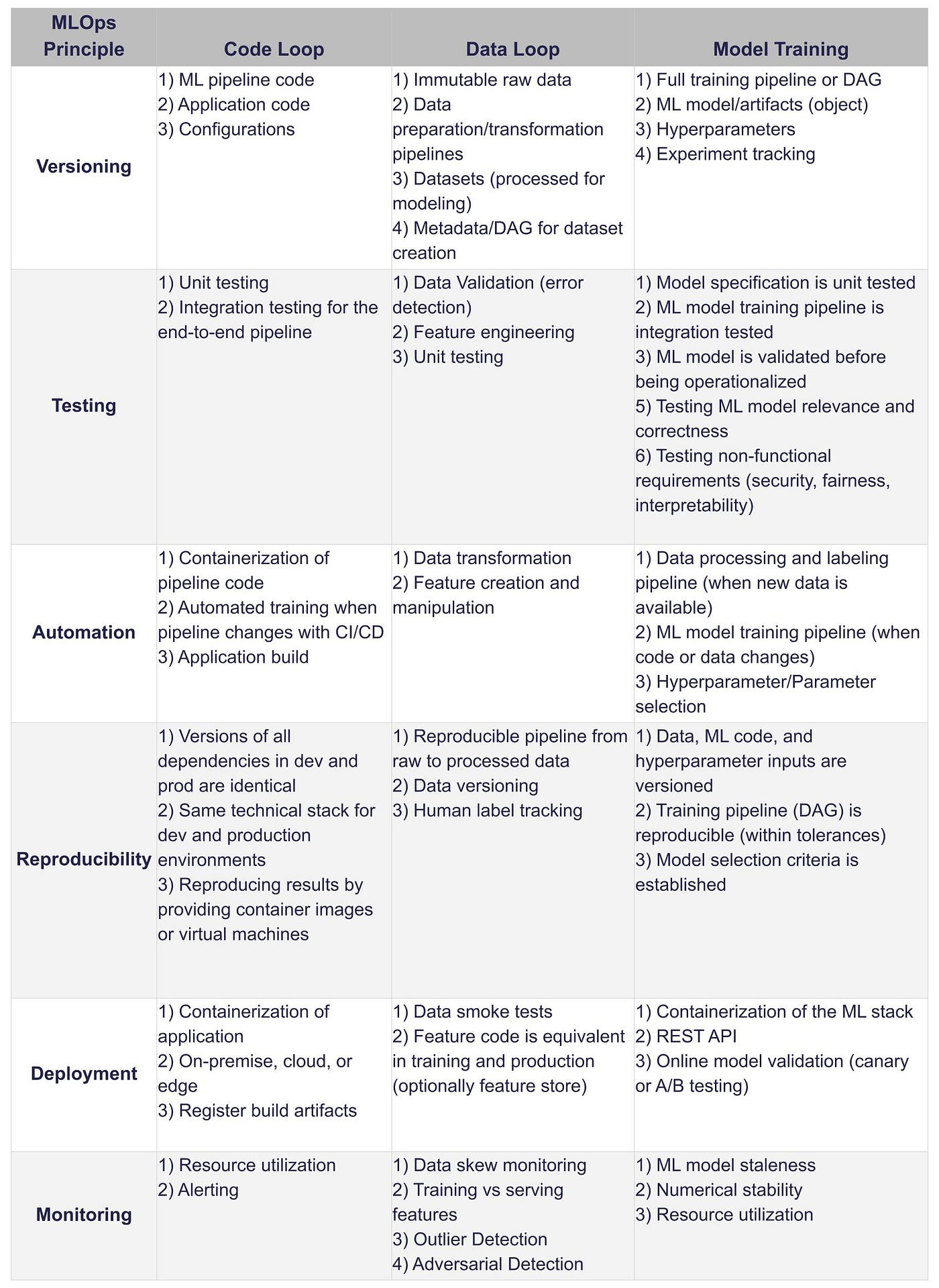

Functionally, the practice of MLOps focuses on incorporating testing, automation, and monitoring into all the stages of development, as shown in the chart below. Numerous considerations go into this process, and there are many tools and blogs that focus on each aspect: some for monitoring and pipelining, some for model training and deployment, and others for data curation and exploration.

The intricacies around the data loop are typically overlooked in many organizations. More importantly, the connections between the data loop and the code loop are crucial to being able to roll back deployments when changes negatively affect the production system. We need to manage the code loop, which DevOps tooling can support, while also supporting the data loop and data+code interactions. The platform that completes the Learning Loop will not only become the canonical stack for machine learning, but also provide the catalyst to Software 2.0.

It will take time for the canonical stack to come together. Despite the tremendous advances in the last decade, machine learning is still very much an early adopter technology. The tools are rapidly evolving and changing. Even the categories are shifting. A year ago, few talked about feature stores, and now more and more companies are taking a hard look at bringing them in house. Terms like MLOps barely existed a few years ago, and now they’ve caught on and become standard terms everyone uses. That’s how a complex ecosystem evolves: slowly at first, then congealing quickly once enough of the core elements are in place.

In the next few years, we’ll likely see a suite of tools forming the canonical stack, similar to the way the LAMP or MEAN stack formed in the web development space. What will be the MEAN stack of AI/ML? The tools that develop fastest and solve the most real world problems for data scientists and data engineers will gain the most traction, making it harder and harder for rivals to outflank them. Almost overnight it will seem like everyone is using the same three or four tools, but as they say in the movie business “it only takes a decade to become an overnight success.” The stack will seem like it formed overnight, but really, it’s taken many years and lots of competition to gel together into something comprehensive.

Eventually it may congeal even further into one nearly complete, end-to-end stack, but that’s many years away.

Practical MLOps: Pachyderm for data and code+data

There are too many variables to safely make a call on which software components will come together to solve all of today’s big challenges in data science. That’s why, for now, we’re going to look at one foundational piece of the Learning Loop rather than try to predict every piece of the final stack.

One piece stands out to me more than any other: the problem of data. We’ve talked a lot about the need to treat data differently in machine learning environments, yet few tools in the space have really focused on creating a powerful, scalable, version-controlled data management system with Git-like tracking capabilities.

In my data science journey I turned to Pachyderm to solve the complex conundrum of data in AI/ML.

I joined Pachyderm this year because I’d struggled with the problem of data for many years as director of applied research in my previous role, and they’d gotten much further along than anyone else in solving the challenges of data in machine learning. Having applied machine learning to a variety of problems (and even co-authored a textbook on the topic), constantly worked to handle the complexities that come with the Machine Learning Loop, and searched for tooling to aid in this process at an organizational level, I can say this strongly:

If the final data foundation of the MLOps stack isn’t Pachyderm, it will look a lot like it.

A core data management platform in the Learning Loop must treat data+code interactions as first-class citizens. Pachyderm tracks changes in data (via commits) and triggers events for pipelines, making it truly data-driven: when data changes, Pachyderm can kick off a new run of the Learning Loop. Most other pipeline systems see everything as a pipeline first, with data as a second-class citizen. That is an outmoded carry-over from the standard DevOps software development life cycle, where code is the centerpiece of what teams produce. In machine learning, data is just as important as code. If you port over the old software development paradigm, the system can’t easily kick off a new Learning Loop iteration when data changes. Instead, pipeline-first systems require a loop that constantly checks the data for changes, which is highly inefficient in the data-centric development life cycle of the AI world.
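The difference between data-driven and pipeline-first triggering can be sketched in a few lines. This is a toy in-memory model, not Pachyderm’s API: `DataRepo`, `subscribe`, and `poll_for_changes` are hypothetical names. In the data-driven version, the commit itself invokes downstream work; in the polling version, something has to wake up on a timer and ask whether anything changed.

```python
from typing import Callable

Callback = Callable[[int, dict], None]


class DataRepo:
    """Toy versioned data repo that notifies subscribers on each commit (hypothetical)."""

    def __init__(self) -> None:
        self.commits: list[dict] = []
        self._subscribers: list[Callback] = []

    def subscribe(self, callback: Callback) -> None:
        self._subscribers.append(callback)

    def commit(self, files: dict) -> int:
        self.commits.append(dict(files))
        commit_id = len(self.commits) - 1
        # Data-driven: the commit itself is the event that starts downstream work.
        for callback in self._subscribers:
            callback(commit_id, self.commits[commit_id])
        return commit_id


def poll_for_changes(repo: DataRepo, last_seen: int) -> list[int]:
    """Pipeline-first alternative: called on a timer, returns commits not yet seen."""
    return list(range(last_seen + 1, len(repo.commits)))


# Data-driven: every commit triggers a (stand-in) retraining run immediately.
training_runs: list[int] = []
repo = DataRepo()
repo.subscribe(lambda commit_id, files: training_runs.append(commit_id))
repo.commit({"train.csv": "v1"})
repo.commit({"train.csv": "v2"})
print(training_runs)                         # [0, 1]

# Polling: changes are only noticed whenever the loop happens to check.
print(poll_for_changes(repo, last_seen=-1))  # [0, 1]
```

In a real polling setup, `poll_for_changes` would sit inside a `while True` loop with a sleep, spending compute on checks that usually find nothing and adding latency between a commit and the run it should trigger.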

When we look at how Pachyderm handles data, we see the solutions to the data loop issues we talked through earlier:

- In the case of how to handle changing data, Pachyderm treats data as Git-like commits to versioned data repositories. These commits are immutable: committed data objects are not just metadata references to where the data is stored, but actual versioned objects/files, so nothing is ever lost. If our training data is overwritten with a new version in a new commit, the old version is always recoverable, because it is saved in a previous commit.

- In the case of types and formats, data is treated as opaque and general. Any type of file (audio, video, CSV, etc.) can be placed into storage and read by pipelines. This makes the system general and uncompromising, rather than focused on one kind of data the way a database focuses only on certain kinds of well-structured data.

- As for the size and quantity of data, Pachyderm uses a distributed storage backend built on object storage services such as Amazon S3 or Google Cloud Storage, so it can scale to essentially any size.
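The commit model in the first bullet can be illustrated with a content-addressed store, similar in spirit to how Git stores objects by hash. This is a simplified sketch under that assumption, not Pachyderm’s actual storage format: `VersionedRepo` is a hypothetical class in which each commit maps paths to content hashes and stored bytes are never overwritten, so “overwriting” a file in a new commit leaves the previous version readable.

```python
import hashlib


class VersionedRepo:
    """Toy Git-like data repo: content-addressed objects plus append-only commits."""

    def __init__(self) -> None:
        self.objects: dict[str, bytes] = {}      # content hash -> file bytes
        self.commits: list[dict[str, str]] = []  # each commit maps path -> content hash

    def commit(self, changes: dict[str, bytes]) -> int:
        # Start from the previous commit's tree so unchanged files carry over.
        tree = dict(self.commits[-1]) if self.commits else {}
        for path, content in changes.items():
            digest = hashlib.sha256(content).hexdigest()
            self.objects.setdefault(digest, content)  # bytes are stored once, never replaced
            tree[path] = digest
        self.commits.append(tree)  # earlier commits are never modified
        return len(self.commits) - 1

    def read(self, commit_id: int, path: str) -> bytes:
        return self.objects[self.commits[commit_id][path]]


repo = VersionedRepo()
c0 = repo.commit({"transcripts.txt": b"bread sit\n"})
c1 = repo.commit({"transcripts.txt": b"brexit\n"})  # "overwrites" the file
print(repo.read(c1, "transcripts.txt"))  # b'brexit\n'
print(repo.read(c0, "transcripts.txt"))  # b'bread sit\n', still recoverable
```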

For the data+code stages, Pachyderm pipelines capture all interactions between code and data: every output artifact has a reference to the Docker-based pipeline, the data sources, and the versioned artifacts produced. The pipelines can be built using practically any data science tooling (packaged in Docker images that run as pods) and scaled to effectively any use case.
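Lineage is what lets us answer questions like “which models were trained on this bad data commit?” The sketch below records, for each output artifact, the pipeline, the Docker image, and the input commits it came from, then queries that record. The names (`Provenance`, `Artifact`, `record_output`, `affected_by`), the image references, and the commit IDs are hypothetical; Pachyderm captures lineage itself, and this only illustrates the idea.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Provenance:
    """Where an artifact came from: which code ran on which data."""
    pipeline: str
    image: str            # Docker image the pipeline ran (hypothetical tag)
    input_commits: tuple  # (repo, commit_id) pairs that were read


@dataclass(frozen=True)
class Artifact:
    path: str
    content_hash: str     # hash of the output bytes
    provenance: Provenance


def record_output(path: str, content: bytes, provenance: Provenance) -> Artifact:
    """Attach lineage to an output at the moment it is produced."""
    return Artifact(path, hashlib.sha256(content).hexdigest(), provenance)


def affected_by(artifacts: list, repo: str, commit_id: str) -> list:
    """Trace a data bug forward: which artifacts consumed this commit?"""
    return [a.path for a in artifacts if (repo, commit_id) in a.provenance.input_commits]


artifacts = [
    record_output("model_v1.bin", b"weights v1",
                  Provenance("train", "example.com/asr-train:1.0", (("audio", "c41"),))),
    record_output("model_v2.bin", b"weights v2",
                  Provenance("train", "example.com/asr-train:1.1",
                             (("audio", "c41"), ("labels", "c9")))),
    record_output("model_v3.bin", b"weights v3",
                  Provenance("train", "example.com/asr-train:1.1", (("audio", "c57"),))),
]

print(affected_by(artifacts, "audio", "c41"))  # ['model_v1.bin', 'model_v2.bin']
```

Because each record names both the code (image) and the data (commits), a problem can be traced to either loop and fixed there without touching the other.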

In another article I began to look at how DevOps and Pachyderm can work together with GitHub to introduce data and code tests into the same toolchain. With GitHub Actions managing the code loop and Pachyderm managing the data loop and code+data pipelines, we can maintain a separation of concerns, iterating on both independently, while still capturing the interactions reliably.

Pairing these tools provides graceful and reliable iteration and collaboration on the machine learning system, from both the code and the data perspective. The combination provides a single source of truth for both code and data, and any interactions between them are captured with Pachyderm’s data lineage capabilities.

There and Back Again

As we’ve seen throughout this article, data is a foundational element of the machine learning loop. It exists not as a single step before model development, but as a loop that we iterate through during the life cycle of our ML application, allowing our software to learn.

Improving our management of the learning loop is the work of MLOps. By focusing on testing, monitoring, and automation, we’re able to bridge the gap between traditional software development and software 2.0.

Completing the learning loop is fundamental in the progression of “software that learns.” When we look at how to “complete the machine learning loop,” we see that there are new challenges that just don’t exist in the traditional software development model. Getting them right means the difference between a successful data science team and one that joins the 87% of data science projects that never make it to production.

The key to the Learning Loop is simple:

The unification of code and data.

No longer can we think of them as separate or one as more important than the other. In the new world of software 2.0 the two loops of iterating on code and iterating on data weave together to form the software development workflow we need to get models into production in the real world.

Managing the two loops (MLOps) means emphasizing testing and versioning for code, data, and their output products/artifacts, while also allowing each of these loops to move independently — fixing bugs in data shouldn’t be dependent on fixing bugs in code.

If you focus on only one over the other, you’ll find yourself constantly dealing with models that don’t stack up against the chaos of production apps, which have to deal with the messiness of people, problems, and breakdowns in logic day in and day out.

But if you focus on code and data as the centerpiece of your machine learning loop, then you’re on your way to building the systems and processes you need to be in that rarefied air of data science teams that consistently deliver models that make a big difference in your business and in the world.

For some of my other related articles and work, see: scaling ML research pipelines or the Deep Learning for NLP and Speech.

If you’re interested in more information on using Pachyderm, check out my simple Intro to Dataset and Model Versioning video, the Getting Started section of the Docs, or connect on Slack.

[1]: To be clear I’m not talking about online learning or continuous training when talking about the Machine Learning Loop. Before we can get there, we need to solve the base case of reliably incorporating feedback into our ML system. As the loop matures, it will naturally move into highly automated steps, but we can’t expect to make much progress on these fronts without first figuring out how to reliably incorporate feedback loops into our machine learning processes.